Beyond Self-Driving: Exploring Three Levels of Driving Automation

ICCV 2025 Tutorial

October 19, 8:50 - 12:10 HST

Hawaii Convention Center, Room 308A

Introduction

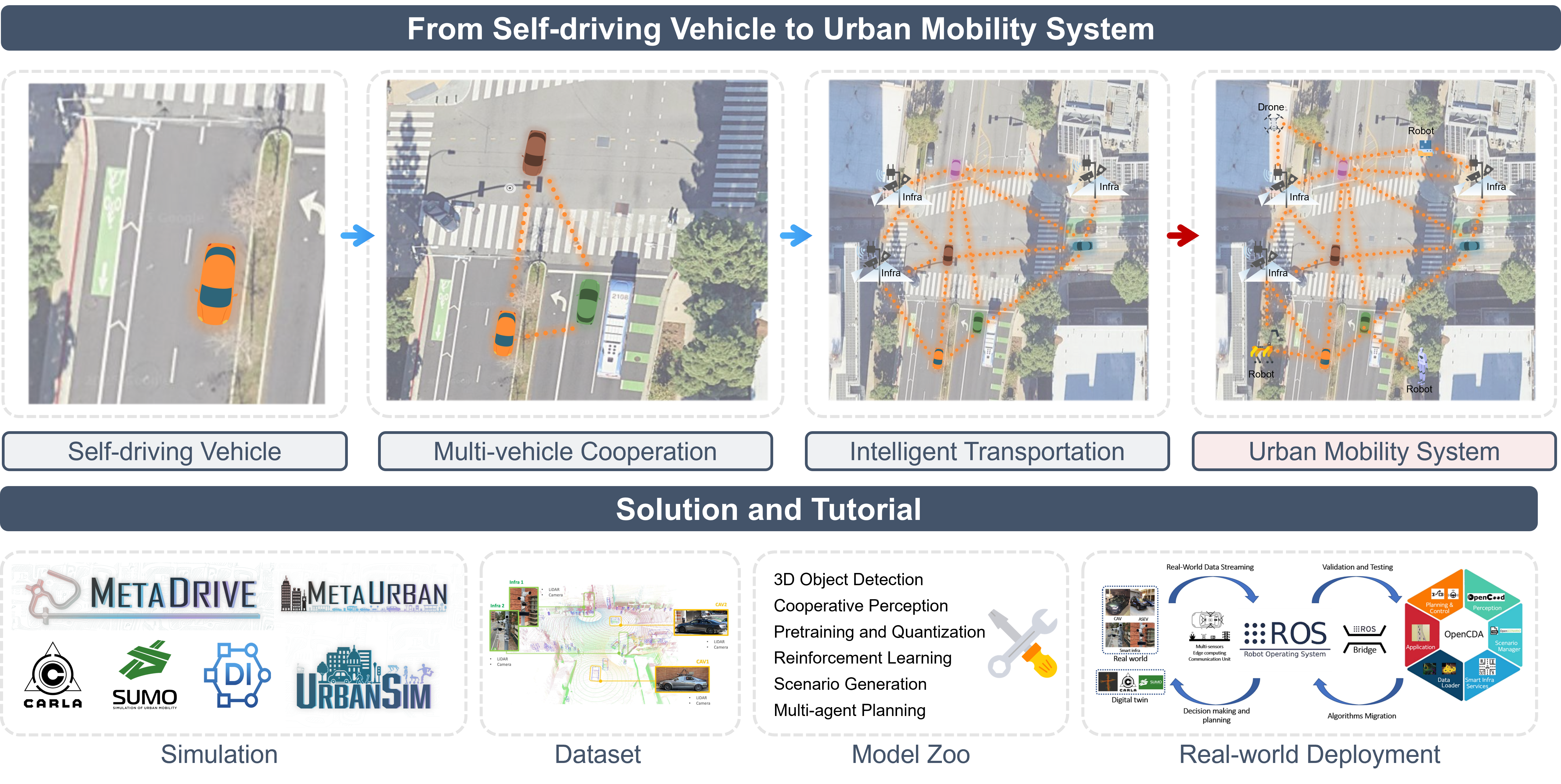

Self-driving technologies have demonstrated significant potential to transform human mobility. However, single-agent systems face inherent limitations in perception and decision-making capabilities. Transitioning from self-driving vehicles to cooperative multi-vehicle systems and large-scale intelligent transportation systems is essential to enable safer and more efficient mobility. However, realizing such sophisticated mobility systems introduces significant challenges, requiring comprehensive tools and models, simulation environments, real-world datasets, and deployment frameworks. This tutorial will delve into key areas of driving automation, beginning with advanced end-to-end self-driving techniques such as vision-language-action (VLA) models, interactive prediction and planning, and scenario generation. The tutorial emphasizes V2X communication and cooperative perception in real-world settings, as well as datasets including V2X-Real and V2XPnP. The tutorial also covers simulation and deployment frameworks for urban mobility, such as MetaDrive, MetaUrban, and UrbanSim. By bridging foundational research with real-world deployment, this tutorial offers practical insights into developing future-ready autonomous mobility systems.

Schedule

| Time (GMT-10) | Programme |

|---|---|

| 08:50 - 09:00 | Opening Remarks |

| 09:00 - 09:30 |

Foundation Models for Autonomous Driving: Past, Present, and Future Foundation models are transforming autonomous driving by unifying perception, reasoning, and planning within a single multimodal learning framework. This tutorial introduces how recent advances in generative AI, spanning vision, language, and world modeling, enable autonomous systems to generalize beyond closed datasets and handle long-tail real-world scenarios. We begin by revisiting the limitations of modular and hybrid AV pipelines and discuss how foundation models bring unified optimization, contextual reasoning, and improved interpretability. The tutorial surveys state-of-the-art vision-language-action frameworks such as LINGO-2, DriveVLM, EMMA, ORION, and AutoVLA, highlighting how language serves as both an interface for human interaction and a medium for model reasoning and decision-making. We further explore emerging techniques in reinforcement fine-tuning, alignment of actions with linguistic reasoning, and continual learning for safe, efficient post-training. Zhiyu Huang is a postdoctoral scholar at the UCLA Mobility Lab, working under the guidance of Prof. Jiaqi Ma. He was previously a research intern at NVIDIA Research's Autonomous Vehicle Group and a visiting student researcher at UC Berkeley's Mechanical Systems Control (MSC) Lab. He received his Ph.D. from Nanyang Technological University (NTU), where he conducted research in the Automated Driving and Human-Machine System (AutoMan) Lab under the supervision of Prof. Chen Lyu.

Zhiyu Huang

Postdoctoral Researcher, UCLA |

| 09:30 - 10:00 |

Towards End-to-End Cooperative Automation with Multi-agent Spatio-temporal Scene Understanding Vehicle-to-Everything (V2X) technologies offer a promising paradigm to mitigate the limitations of constrained observability in single-vehicle systems through information exchange. However, existing cooperative systems are limited in cooperative perception tasks with single-frame multi-agent fusion, leading to a constrained scenario understanding without temporal cues. This tutorial will explore how cooperative systems can achieve a comprehensive spatio-temporal scene understanding and be jointly optimized for the full autonomy stack: perception, prediction, and planning. The tutorial will begin by introducing V2XPnP-Seq, the first real-world sequential dataset supporting all V2X collaboration modes (vehicle-centric, infrastructure-centric, V2V, and I2I). Attendees will learn how to leverage this dataset and its comprehensive benchmark, which evaluates 11 distinct fusion methods, to validate their own cooperative models. Next, the tutorial will delve into V2XPnP, a novel intermediate fusion end-to-end framework that operates within a single communication step. Compared to traditional multi-step strategies, this framework achieves a 12% gain in perception and prediction accuracy while reducing communication overhead by 5×. Training such a complex multi-agent, multi-frame, and multi-task system, however, poses significant challenges. To address this, TurboTrain will be presented, an efficient training paradigm that integrates spatio-temporal pretraining with balanced fine-tuning. Participants will gain insights into how this approach achieves 2× faster convergence and improved performance, while preserving the deployment of task-agnostic spatio-temporal features. Finally, the discussion will extend to the crucial task of planning. Here, Risk Map as Middleware (RiskMM) is introduced as an interpretable cooperative end-to-end planning framework that explicitly models agent interactions and risks. This approach enhances the transparency and trustworthiness of autonomous driving systems. Through this progressive exploration, attendees will gain a holistic understanding of the state-of-the-art in multi-agent cooperative systems and will be equipped with the knowledge to build, train, and evaluate their own end-to-end spatio-temporal V2X solutions. Zewei Zhou is a Ph.D. student in the UCLA Mobility Lab at the University of California, Los Angeles (UCLA), advised by Prof. Jiaqi Ma. He received his master’s degree from Tongji University with the honor of Shanghai Outstanding Graduate, and conducted research at the Institute of Intelligent Vehicles (TJU-IIV) under the supervision of Prof. Yanjun Huang and Prof. Zhuoping Yu.

Zewei Zhou

PhD Candidate, UCLA |

| 10:00 - 10:30 |

Bridging Simulation and Reality in Cooperative V2X Systems Bridging the gap between simulation and deployment for cooperative V2X perception demands algorithms and systems that remain robust to bandwidth limits, latency spikes, and localization/synchronization errors—yet are also reproducible and scalable for research. This tutorial surveys an end-to-end sim-to-real pipeline and proposes design patterns that make cooperative perception practical “from sims to streets.” We begin with OpenCDA-ROS, which synthesizes ROS’s real-world messaging with the OpenCDA ecosystem to let researchers migrate cooperative perception, mapping/digital-twin, decision-making, and planning modules bidirectionally between simulation and field platforms, thereby narrowing the sim-to-real gap for CDA workflows. On this foundation, we present CooperFuse, a real-time late-fusion framework that operates on standardized detection outputs—minimizing communication overhead and protecting model IP—while adding multi-agent time synchronization and HD-map LiDAR-IMU localization. CooperFuse augments score-based fusion with kinematic/dynamic and size-consistency priors to stabilize headings, positions, and box scales across agents, improving 3D detection under heterogeneous models at smart intersections. Moving beyond detections, V2X-ReaLO is a ROS-based online framework that integrates early, late, and intermediate fusion and—critically—delivers the first practical demonstration that exchanging compressed BEV feature tensors among vehicles/infrastructure is feasible in real traffic, with measured bandwidth/latency constraints. It also extends V2X-Real into synchronized ROS bags (25,028 frames; 6,850 annotated keyframes), enabling fair, real-time evaluation across V2V/V2I/I2I modes under deployment-like conditions. Zhaoliang Zheng is a Fifth-year Ph.D candidate in Electrical and Computer Engineering at UCLA Mobility Lab, advised by Professor Jiaqi Ma. He received his M.S degree in UCSD, supervised by Prof. Falko Kuester and Prof. Thomas Bewley. His research focuses on cooperative perception, multi-sensor fusion, and real-time learning systems for mobile robots.

Zhaoliang Zheng

PhD Candidate, UCLA |

| 10:30 - 10:40 | Coffee Break |

| 10:40 - 11:20 |

From Pre-Training to Post-Training: Building an Efficient V2X Cooperative Perception System Recent advances in cooperative perception have demonstrated significant performance gains for autonomous driving through Vehicle-to-Everything (V2X) communication. However, real-world deployment remains challenging due to high data requirements, prohibitive training costs, and strict real-time inference constraints under bandwidth-limited conditions. This tutorial provides a full-stack perspective on designing efficient V2X systems across the learning and deployment pipeline. In the first part, we will introduce data-efficient and training-efficient pretraining strategies, including CooPre (collaborative pre-training for V2X) and TurboTrain (multi-task multi-agent pre-training). In the second part, we will focus on inference-efficient and resource-friendly deployment techniques, highlighting QuantV2X, a fully quantized cooperative perception system. Finally, the tutorial will conclude with a hands-on coding session, where we will walk over with the audience with core components of an efficient V2X ecosystem, bridging algorithmic design with practical deployment. Seth Z. Zhao is a second-year Ph.D. student in Computer Science at UCLA, advised by Professors Bolei Zhou and Jiaqi Ma. He previously earned his M.S. and B.A. in Computer Science from UC Berkeley, where he conducted research under the guidance of Professors Masayoshi Tomizuka, Allen Yang, and Constance Chang-Hasnain.

Seth Z. Zhao

PhD Candidate, UCLA |

| 11:20 - 12:00 |

Building Scalable, Human-Centric Physical AI Systems Large language models and generative models have made remarkable progress by scaling with internet-scale data. In contrast, physical AI, intelligent agents that perceive, decide, and act in the real world, still lags behind. Two key challenges lie in the mismatch between how robots learn and how internet data is created, as well as the critical safety concerns that arise when interacting with humans in the physical world. In this tutorial, I will discuss how to build scalable, human-centric physical AI systems by rethinking both the usage of data and the modeling of humans. I will introduce a three-pronged recipe for scalable, human-centric robot learning: 1) Simulation, which provides a controllable and efficient environment for training interactive behaviors at scale; 2) Human-created videos, which capture the visual complexity and semantic richness of the real world that simulations often lack; and 3) Human modeling, which brings realistic dynamics into simulated environments, helping to improve the safety and social compliance of trained robots. Finally, I will discuss how these components can be harmonized to enable the next generation of scalable, generalizable physical AI systems. Wayne Wu is a Research Associate in the Department of Computer Science at the University of California, Los Angeles, working with Prof. Bolei Zhou. Prior to this, he was a Research Scientist at Shanghai AI Lab, where he led the Virtual Human Group. He also served as a Visiting Scholar at Nanyang Technological University, collaborating with Prof. Chen Change Loy. He earned his Ph.D. in June 2022 from the Department of Computer Science and Technology at Tsinghua University.

Wayne Wu

Research Associate, UCLA |

| 12:00 - 12:10 | Ending Remarks |

Resources

| Project | Description | Link |

|---|---|---|

| AutoVLA | A Vision-Language-Action Model for End-to-End Autonomous Driving with Adaptive Reasoning and Reinforcement Fine-Tuning. | github.com/ucla-mobility/AutoVLA |

| Awesome-VLA-for-AD | A collection of resources for Vision-Language-Action models for autonomous driving. | github.com/worldbench/awesome-vla-for-ad |

| OpenCDA | An open co-simulation-based research/engineering framework integrated with prototype cooperative driving automation pipelines. | github.com/ucla-mobility/OpenCDA |

| V2X-Real | The first large-scale real-world dataset for Vehicle-to-Everything (V2X) cooperative perception. | mobility-lab.seas.ucla.edu/v2x-real |

| V2XPnP | The first open-source V2X spatio-temporal fusion framework for cooperative perception and prediction. | mobility-lab.seas.ucla.edu/v2xpnp |

| QuantV2X | A fully quantized multi-agent perception pipeline for V2X systems. | github.com/ucla-mobility/QuantV2X |

| TurboTrain | A training paradigm for cooperative models that integrates spatio-temporal pretraining with balanced fine-tuning. | github.com/ucla-mobility/TurboTrain |

| MetaDrive | An Open-source Driving Simulator for AI and Autonomy Research. | github.com/metadriverse/metadrive |

| MetaUrban | An embodied AI simulation platform for urban micromobility | github.com/metadriverse/metaurban |

| UrbanSim | A large-scale robot learning platform for urban spaces, built on NVIDIA Omniverse. | github.com/metadriverse/urban-sim |

Acknowledgments

This tutorial was supported by the National Science Foundation (NSF) under Grants CNS-2235012, IIS-2339769, and TI-2346267; the NSF POSE project DriveX: An Open-Source Ecosystem for Automated Driving and Intelligent Transportation Research; the Federal Highway Administration (FHWA) CP-X project Advancing Cooperative Perception in Transportation Applications Toward Deployment; and the Center of Excellence on New Mobility and Automated Vehicles.